If you put aside the arguments and debates about how it will affect employment, I think autonomous cars could drastically cut down on traffic deaths once the software and hardware are sufficiently evolved.

However, I was watching a recent episode of BBC's Click about them and it left me a bit perturbed.

Here's why:

In the episode, they covered the death of a pedestrian struck by an Uber car in Arizona. Much of the blame lies with the 'safety driver', who was busy watching a TV show on her phone and failed to spot the pedestrian in time. But there's more. Comparisons between the autonomous car's footage and normal dashcam footage taken on the same bit of road at the same time of night showed the Uber footage to be significantly below par: the viewing distance was much greater with the standard dashcam. Why? Are Uber using sub-par components (perhaps to cut costs)?

And there's more. Uber used a Volvo that had its own anti-crash software and hardware, but Uber had deliberately disabled it because it conflicted with their own system, causing the car to jerk. Was this a case of the need for performance overriding safety?

Here's a question for the lawyers among us. If I buy a car and deliberately disable the anti-crash system, then go on to kill a pedestrian, how liable am I? My own conscience tells me that I would be 100% liable but maybe that's just me.

Astonishingly (to me), Uber have been found not to be criminally liable for the woman's death. I would expect the safety driver to suffer the consequences of her negligence, but I would have thought Uber must also bear some responsibility for disabling a car manufacturer's safety system.

I am left to wonder if there is something political going on here: perhaps an attempt to keep the autonomous car industry out of the courts and therefore in a good light with consumers.

Whatever happens, many people are unhappy with the notion of driverless cars, and this won't help change their minds.

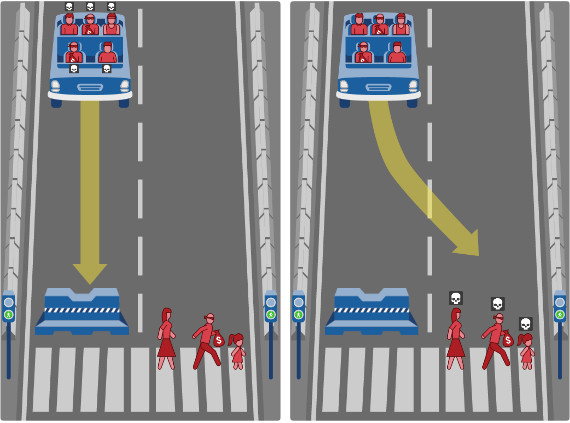
